Imagine a world where humans interact with various forms of advanced AI throughout their days. Ideally, this technology will help the humans who created it. But what if our new toys can't be controlled? This chaotic future is closer to becoming our daily reality than many of us realize.

Over the past few years, the arrival and rapid spread of artificial intelligence has become a huge story. AI is advancing quickly and shows no signs of slowing down. It has not yet killed or harvested humans as in the Terminator or Matrix movies, but it has already proven alarmingly disruptive.

The Academic World Grapples with the AI Tsunami

Artificial intelligence can address some of the most substantial educational challenges of the present day. It can transform teaching and learning and speed progress toward Sustainable Development Goal 4, which focuses on quality education.

However, rapid technological change inevitably brings considerable risks and challenges. Generative AI is popular for perks such as better time management and free study resources, but it also makes cheating easier and can stifle originality.

According to the Global Student Survey of 2023, 40% of student participants said they had used AI for academic purposes, and that number is likely to keep rising.

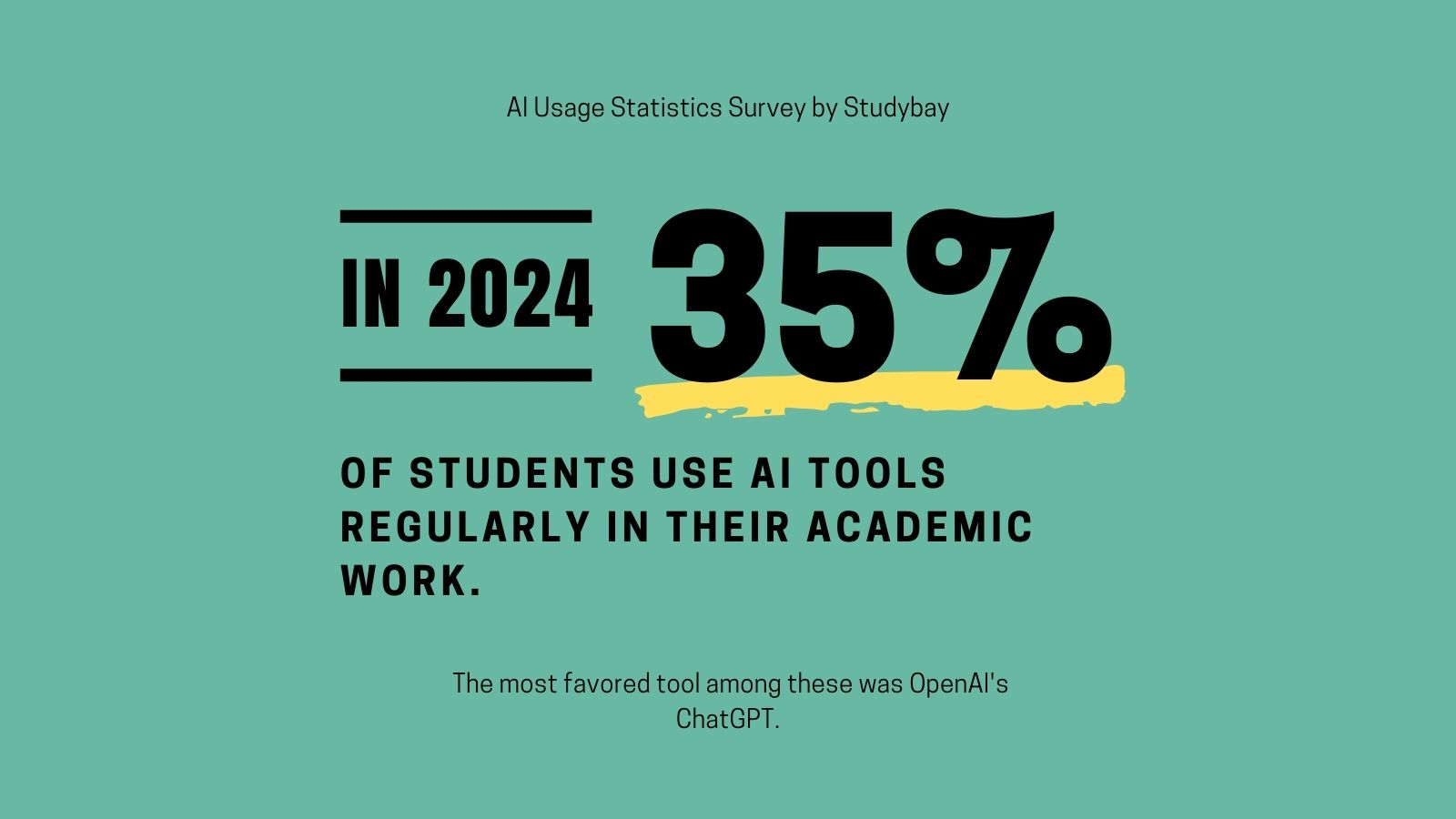

Studybay, an online platform for learners and professionals, conducted a survey in 2024 to understand how students interact with AI. It surveyed 1,000 participants from across the country, aged 18 to 32 and enrolled in graduate, postgraduate, or doctoral programs. The research showed a strong preference for integrating artificial intelligence into academic work:

- 35% of students regularly use AI tools for their academic tasks.

- 22% of the students surveyed admitted to using ChatGPT or similar AI programs to help with exams and quizzes.

- 75% of students who regularly use generative AI said their grades had improved.

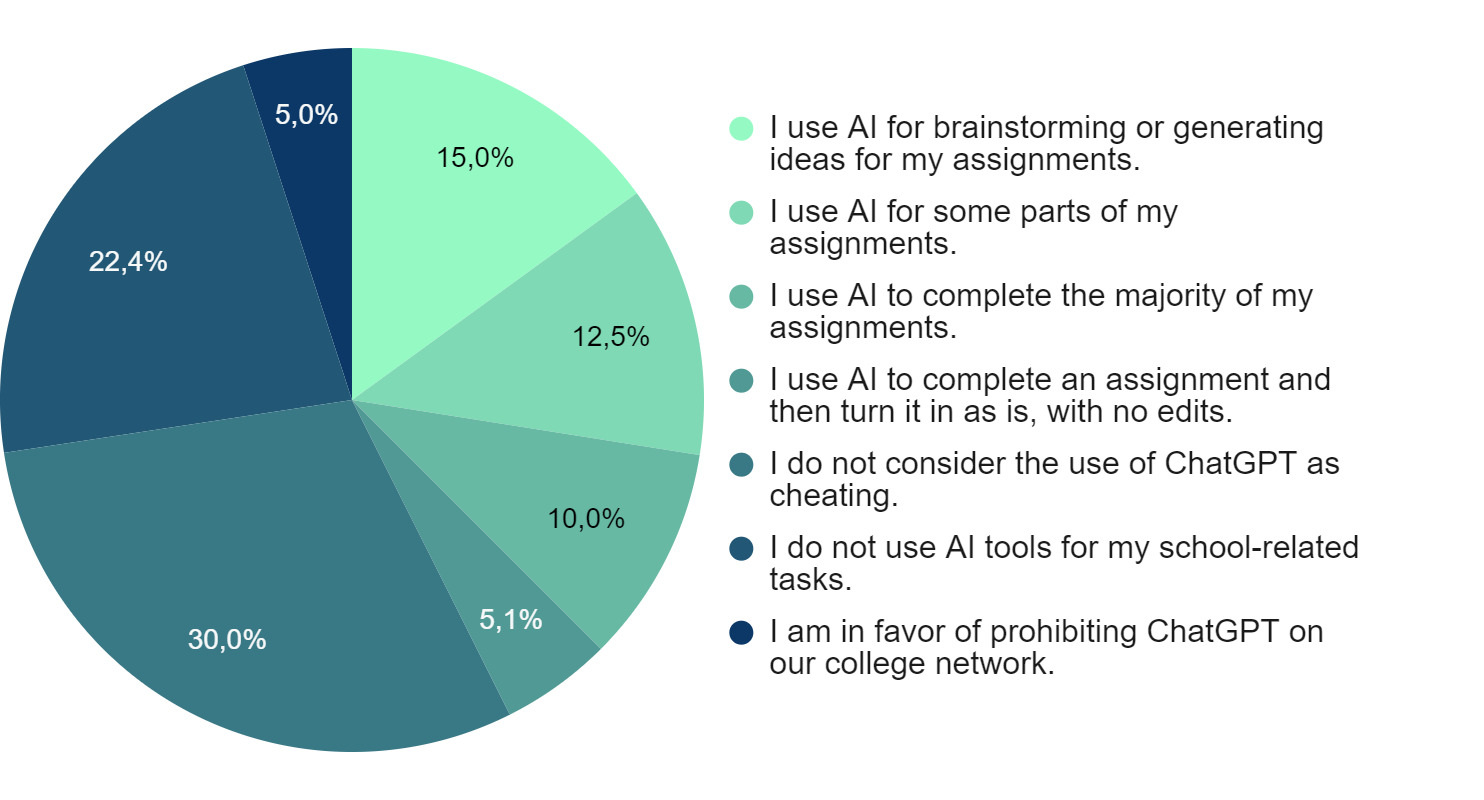

The survey covered students from a range of fields. Marketing and psychology majors were the most likely to use AI in their assignments, while accounting and medical students were the least likely.

These findings are plausible: AI tools may be less useful for accounting assignments, and medical school work carries greater ethical stakes. OpenAI's ChatGPT, arguably the most popular AI tool overall, was also the students' favorite.

More worrying, nearly 30% of respondents shared the view that using AI tools like ChatGPT should not be considered 'cheating'. Only 5% of respondents said they had never used AI in any form. Most of the others said they had used it in some way, whether for inspiration, brainstorming ideas, or completing their assignments.

AI-Powered Novel Shocks Literary World, Wins Akutagawa Prize

College students faking essays is just the tip of the iceberg. Students are not the only ones jumping on the AI bandwagon; authors are too. Japanese author Rie Kudan won the 2024 Akutagawa Prize, one of Japan's most prestigious literary awards, for her novel Tokyo Tower of Sympathy.

In her acceptance speech, she revealed that ChatGPT played an important role in writing the novel. And (get ready for this) she plans to keep using ChatGPT whenever she gets stuck.

Kudan is openly acknowledging the role of AI here and has even credited generative AI in her work. The morality and ethics of this are hotly debated, but one thing is clear: the issue is no longer black and white. This moral gray area is just one example of how AI has entered many spaces in our lives: offices, homes, schools, and even the writer's desk.

AI's Illusion Exposed: Fraudulent Artistry Infiltrates Top Scientific Journals

The literary world is far from the only part of modern society being rocked by generative AI. AI-generated images published in a peer-reviewed scientific journal recently drew widespread attention on X.

The images were meant to depict scientific concepts in visual form, but they had virtually no scientific value. Even so, they made it into print. Once the pictures started circulating on social media, the article was quickly retracted.

The danger these events expose is that AI can produce fabricated images convincing enough to appear in highly regarded, peer-reviewed scientific journals, even when what they depict is false.

Deepfake Onslaught: AI Frauds Soar, Threatening Security and Swinging Votes

Deepfakes are among the most common AI-enabled attacks. According to SumSub's Identity Fraud Report of 2023, deepfake fraud attempts increased by 3,000% last year.

The term "deepfake" combines "deep learning" and "fake," and refers to audio or visual media generated or manipulated with AI so that it seems genuine. One example of deepfake technology used for crime was a sophisticated $35 million bank heist, in which fraudsters used deepfake audio to clone the voice of a bank director and convinced employees to transfer the funds.

This incident showcases the advanced capabilities of deepfake technology and highlights the severe financial and security risks it poses. The technology is also widely used to create non-consensual pornography of celebrities, which accounts for approximately 96% of deepfakes found on the internet. Spreading political misinformation is another prominent misuse.

AI trickery poses major challenges during election seasons. Several major companies, including Amazon, Microsoft, and Google, have acknowledged that AI can generate content capable of swaying voter opinion during the most critical stretches of an election cycle. The problem is that these AI-generated images and other materials are deceptive or outright fraudulent, yet they can still change how voters feel about a candidate.

For instance, AI could be asked to create pictures showing candidates in compromising situations that never happened. The companies that have pledged to combat this kind of misuse may be acting in good faith, but what they can actually do is decidedly limited.

Experts familiar with the generative AI tools now flooding the market expect fraudulent content aimed at discrediting political candidates to become more common with every election cycle. Voters who are easily fooled may fall for these tactics, which could sway the outcome of elections around the world.

ChatGPT and the Next Wave of Cyber Attacks

IT insiders are sounding the alarm: the first major cyberattack carried out with the help of ChatGPT may be coming soon.

ChatGPT is one of the most prominent AI tools available to the general public, and, as one might expect, the public has been experimenting with it. Much of that experimentation is relatively harmless, but certain bad actors have been trying to bend the tool to their purposes, with the eventual aim of carrying out cyberattacks.

Such attacks could target businesses or individuals. Someone with an axe to grind and the technical know-how to misuse ChatGPT could launch an attack against an individual or company they feel has wronged them.

Attackers need not be motivated by a perceived insult or injury, either. They may be after profit, or they may simply want to cause chaos and anarchy.

Going with the Flow

But that's not to say we should stop using AI. When computers first came along, people were wary of them, fearing they would cut jobs. That was true to some extent, but mostly computers changed how work was done. AI is likely to do the same, reshaping our jobs in exciting new ways rather than simply taking them away.

- AI Revolutionizes Marketing

For many companies, AI automates tasks such as lead generation, lead scoring, and client retention. With AI's help, marketers can identify likely customers and reach them with the most relevant products and services at the moment they are most likely to respond positively.

- In 2022, before OpenAI released ChatGPT and significantly reduced the cost of such tools, IBM found that 23% of marketing professionals were using AI in their work. Organizations now recognize that they must turn to AI to keep up with a market that moves faster than ever.

- Around 88% of marketers acknowledge that their company must expand its use of AI to meet client expectations and stay competitive.

- According to a 2023 study, 61.4% of marketers have already started using AI in their marketing campaigns. In practice, the technology is increasingly taking over activities such as content production, ad campaigns, email subject-line personalization, and predictive analysis.

As AI tools become smarter and more efficient, AI integration is likely to deepen in the coming years.

- Efficiency on Wheels: AI in Transportation

Transportation is one sector AI is transforming, notably by making autonomous cars safer, and self-driving cars are just the beginning of how far this technology can go. A 2023 report valued the global AI in transportation market at USD 3 billion and projected annual growth of 22% through 2032.

Trains are also changing significantly with the rise of AI, through the arrival of automatic train operation (ATO). Systems are classified by grade of automation (GoA), and at the highest level, GoA4, no human operators are needed on board at all.

One of the main expected benefits of AI tools is the elimination of human error. Yet automated lines have still seen several mishaps, such as the 2019 Tsuen Wan Line crash in Hong Kong, the 2021 Kelana Jaya LRT collision in Kuala Lumpur, in which 213 people were injured, and, most recently, the accident at Qi'an Road station on Shanghai's Line 15, in which an elderly person was fatally injured.

We are still in the early stages of handing tasks over to automated systems, and these incidents show that even the most advanced systems are not completely error-free.

- AI in Healthcare: The Future of Wellness

It may seem far-fetched, but AI is already changing how people engage with medical providers. A 2023 report valued the AI in healthcare market at USD 11 billion!

Because it can analyze large volumes of data, AI helps identify diseases and other ailments quickly and accurately. A healthy dose of AI has also sped up drug discovery and patient care, for example through virtual medical assistants.

Robotic surgery is also set for a major boost in the coming years, with AI-assisted surgery expected to grow at a CAGR of 39.2% through 2030. Tech giants such as IBM, Microsoft, NVIDIA, DeepMind, and Intel are the major players to watch as they push the boundaries of AI in medicine.

What It Means for Academia

AI is here to stay, that's for sure. This means every field, especially academia, must find ways to work with it. Let's look at what the growth of AI tools means for the future of academia.

Coursework Tailored to Individuals

There are legitimate ways for learners to use AI technologies like ChatGPT in their studies, and integrating AI into academics could transform how students learn. Instead of the one-size-fits-all approach of traditional education systems, teachers can use AI tools to make education more enjoyable, efficient, and personalized.

Innovators are even creating apps that tailor lessons to each student, adjusting them based on data collected about the student's performance and difficulties.

In practice, we imagine learners using AI tools to adjust coursework to their own pace and abilities. Math, for example, is one of the subjects students dread most, and we've all experienced the stress and horror of being stuck on a problem for hours. Now imagine getting simplified explanations and practice questions pitched at your skill level, so that you can build a strong grasp of the concepts.

Platforms such as DreamBox Learning already use AI to adapt to students' responses and tailor their coursework accordingly. Similarly, Khan Academy, one of the most popular educational YouTube channels, has embraced the AI revolution by launching Khanmigo, its 24/7 personalized AI tutor.

So while students can shortchange themselves with AI, the technology's overall benefits for education cannot be ignored either.

Unique Assignments That Acknowledge AI

Some educators have also devised innovative ways to bring AI into the classroom. Take Ethan Mollick, a professor at the Wharton School of the University of Pennsylvania. He knew he couldn't stop his students from using ChatGPT, so he decided to work with it instead, asking them to use ChatGPT to come up with ideas for their assignments!

Teachers can also shift their approach and hand out assignments that are hard to complete with AI alone. Tasks that ask students to give their own take on a question, such as linking two fields or ideas, require human judgment. Multiple-choice questions that aren't straightforward also demand critical thinking, and AI may not be able to solve them from contextual clues.

Instead of asking students to repeat factual information, teachers can focus on tasks that promote analysis, creativity, and original thought. Timothy Main of Conestoga College has described receiving AI-generated submissions: “I had answers come in that said, ‘I am just an AI language model, I don’t have an opinion on that.’”

Cases like this suggest that teachers now need a list of AI-proof assignment ideas if they want to avoid regurgitated answers entirely. Alternatively, they can embrace AI tools by giving students homework that specifically involves writing prompts.

A Return to Paper Tests?

The COVID-19 pandemic forced students and professors to embrace online tests to cope with lockdown restrictions. Some teachers handed out proctored multiple-choice tests, while others opted for written-answer tests in which students typed out their responses.

Yet another approach was for students to take a pen-and-paper test at home and send scanned copies to their teachers for evaluation.

There is not much a teacher can do to stop students from using AI, even with an AI-detection tool, because such tools always carry the risk of false positives and unwarranted punishment. For instance, a Texas A&M professor accused an entire class of using AI on their final assignments. The accusation turned out to be a false positive, and most of the class was eventually cleared.

To avoid computers altogether, academia might be forced to bring back pen-and-paper tests, which give a clearer picture of whether students have truly mastered a subject by the end of the semester.

Or, they might have to accept the use of AI – and work alongside it.

The Moral Gray Area

So when is it okay to use AI for education? Most educators agree that writing your assignments entirely with generative AI is a big no-no. But perhaps AI could be encouraged as an effective research tool.

Instead of spending hours browsing Google's search results for the explanation you want, you could get it directly from ChatGPT, phrased in a way you understand.

Basic questions, such as the definition of a neutralization reaction in chemistry or a simple piece of C++ code, are the building blocks for more advanced topics. They are also risky territory, because AI can answer them so easily. Educators therefore need to decide whether it is acceptable to use AI for these questions, or whether to increase face-to-face interaction to rule out its use.

This may also be why education today should put more emphasis on critical-thinking essays built around contextual questions that AI is likely to fumble, or where its use is easy to spot.

Learning AI in School

Conversely, schools could embrace AI and teach students how to use generative AI tools constructively. This skill could become non-negotiable in the future, just as computer skills did once computers arrived.

The University of Michigan already offers a course on Advanced Artificial Intelligence that covers generative AI. And it may not be long before prompt writing appears in middle school coursework, too.

Academia Must Scramble to Adjust to AI’s Capabilities

As we stand on the brink of this new educational frontier, the questions we face are not just about academic integrity or the fair use of AI; they're about the very essence of learning and development.

If future generations come to rely excessively on AI, entrusting it with ideation and creation, what becomes of the essential human skills that have propelled us forward through history? Are skills like improvisation, creativity, critical thinking, and problem-solving at risk of withering in the shadow of AI's omnipresence?