TMCnet News

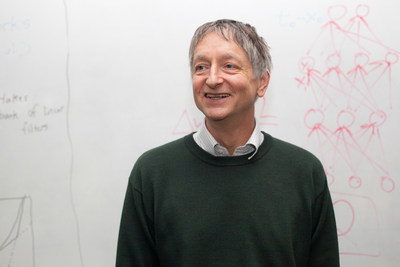

The BBVA Foundation bestows its award on the architect of the first machines capable of learning in the same way as the human brain

MADRID, Jan. 17, 2017 /PRNewswire/ -- The BBVA Foundation Frontiers of Knowledge Award in the Information and Communication Technologies category goes, in this ninth edition, to artificial intelligence researcher Geoffrey Hinton, "for his pioneering and highly influential work" to endow machines with the ability to learn. The new laureate, the jury continues, "is inspired by how the human brain works and how this knowledge can be applied to provide machines with human-like capabilities in performing complex tasks."

Hinton (London, 1947) is Professor of Computer Science at the University of Toronto and, since 2013, a Distinguished Researcher at Google, which hired him after the speech and voice recognition programs developed by him and his team proved far superior to those then in use. His research has since accelerated the progress of AI applications, many of which are now reaching the market: from machine translation and photo classification programs to speech recognition systems and personal assistants like Siri, by way of such headline developments as self-driving cars. Biomedical research is another area to benefit – for instance, through the analysis of medical images to diagnose whether a tumor will metastasize, or the search for molecules of utility in new drug discovery – along with any research field that demands the identification and extraction of key information from massive data sets.

For jury members Ramón López de Mántaras and Regina Barzilay, Hinton's research has sparked a scientific and technological "revolution" that has "astounded" even the AI community, whose members had simply not anticipated the field advancing by such leaps and bounds. The jury describes deep learning, a field that owes so much to Hinton's expertise, as "one of the most exciting developments in modern AI."

Deep learning draws on the way the human brain is thought to function, with attention to two key characteristics: its ability to process information in distributed fashion, through multiple interconnected brain cells, and its ability to learn from examples. The computational equivalent involves the construction of neural networks – a series of interconnected programs simulating the action of neurons – and, as Hinton describes it, "teaching them to learn."

"The best learning machine we know is the human brain. And the way the brain works is that it has billions of neurons and learns by changing the strengths of connections between them," Hinton explains.
"So one way to make a computer learn is to get the computer to pretend to be a whole bunch of neurons, and try to find a rule for changing the connection strengths between neurons, so it will learn things like the brain does." The idea behind deep learning, the new laureate continues, is to present the machine with lots of examples of inputs, and the outputs you would like it to produce. "Then you change the connection strengths in that artificial neural network so that when you show it an input it gets the answer right."

The eminent scientist's research has focused precisely on discovering what the rules are for changing these connection strengths. This, he affirms, is the path that will lead to "a new kind of artificial intelligence," where, unlike with other strategies attempted, "you don't program in knowledge, you get the computer to learn it itself from its own experience."

Although their most important applications have emerged only recently, neural networks are far from being a new thing. When Hinton began working in artificial intelligence – spurred by a desire to understand the workings of the human brain that had initially taken him into experimental psychology – his colleagues were already moving away from the neural nets that he defended as the best way forward. The first results had not lived up to their promise, but Hinton chose to persevere against the advice of his professor and despite his failure to raise the necessary research funding in his home country, the UK. His solution was to emigrate, first to the United States and subsequently to Canada, where he was at last able to form a team and advance his work on neural networks, which back then, in the 1980s, were little more than a backwater in AI research.

Only halfway through the first decade of the 21st century did results come in that would draw scientists back to the network strategy.
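The learning principle Hinton describes (show the machine example inputs together with the outputs you want, then adjust connection strengths until it answers correctly) can be illustrated with a minimal sketch. The Python below is a hypothetical illustration, not code from Hinton's research: it trains a single artificial neuron on logical OR using the classic perceptron learning rule, a much older and simpler rule than those used in deep learning. The function names `predict` and `train`, the epoch limit and the learning-rate value are assumptions chosen for the example.

```python
# Hypothetical sketch: one artificial "neuron" learns logical OR from examples
# using the classic perceptron learning rule (not Hinton's own method).

def predict(weights, bias, inputs):
    """Fire (output 1) if the weighted sum of the inputs exceeds zero."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train(examples, epochs=20, learning_rate=0.1):
    """Adjust connection strengths until every example is answered correctly."""
    n_inputs = len(examples[0][0])
    weights = [0.0] * n_inputs  # connection strengths start at zero
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                # Strengthen or weaken each connection in proportion to its input.
                weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:  # every example now gets the right answer
            break
    return weights, bias


if __name__ == "__main__":
    # Example inputs paired with the outputs we would like the neuron to produce.
    or_examples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
    w, b = train(or_examples)
    for inputs, target in or_examples:
        print(inputs, "->", predict(w, b, inputs), "(expected", target, ")")
```

Weights change only when the neuron answers wrongly, which mirrors the idea of learning from mistakes. Because OR is linearly separable, this rule is guaranteed to reach a setting that answers every example correctly; a single neuron cannot do the same for patterns such as XOR, which is one reason practical networks use several layers.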
Hinton had created an algorithm capable of strengthening the connections between artificial neurons, so that a computer could "learn" from its mistakes. In the resulting programs, successive layers of artificial neurons processed information step by step. To recognize a photo, for instance, the first layer of neurons would register only black and white, the second a few rough features … and so on until arriving at a face. In artificial neural networks, what strengthens or weakens the connections is whether the information carried is correct or incorrect, as verified against the thousands of examples the machine is provided with. Conventional approaches, by contrast, were based on logic, with scientists creating symbolic representations that the program would process according to pre-established rules of logic.

"I have always been convinced that the only way to get artificial intelligence to work is to do the computation in a way similar to the human brain," says Hinton. "That is the goal I have been pursuing. We are making progress, though we still have lots to learn about how the brain actually works."

By 2009 there was no doubting the success of Hinton's strategy. The programs he developed with his students were soon beating every record in the AI area. His approach also benefited from other advances in computation: the huge leap in computing power and the avalanche of data becoming available in every domain. Indeed, many are convinced that deep learning is the necessary complement to the rise of big data.

Today Hinton feels that time has proved him right: "Years ago I put my faith in a potential approach, and I feel fortunate because it has eventually been shown to work." Asked about the deep learning applications that have most impressed him, he talks about the latest machine translation tools, which are "much better" than those based on programs with predefined rules.
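The layered, error-driven learning described here can also be sketched in a few dozen lines. This hypothetical Python example is not the specific algorithm the release credits to Hinton, which it does not detail; it uses backpropagation, a standard gradient-based method in which the output error is passed back through the layers to decide how much each connection should be strengthened or weakened. It has only one hidden layer, far fewer than a face-recognition system would use, and learns XOR, a pattern no single neuron can represent. The class name `TwoLayerNet`, the hidden-layer size, the learning rate and the step count are all illustrative assumptions.

```python
import math
import random


def sigmoid(z):
    """Smooth 'firing rate' between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


class TwoLayerNet:
    """Hypothetical tiny network: inputs -> one hidden layer -> one output unit."""

    def __init__(self, n_inputs=2, n_hidden=4, seed=0):
        rng = random.Random(seed)  # fixed seed so runs are repeatable
        # w1[j][i]: strength of the connection from input i to hidden unit j
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        # w2[j]: strength of the connection from hidden unit j to the output
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        """Pass information layer by layer; return hidden activities and output."""
        h = [sigmoid(b + sum(w * xi for w, xi in zip(row, x)))
             for row, b in zip(self.w1, self.b1)]
        y = sigmoid(self.b2 + sum(w * hj for w, hj in zip(self.w2, h)))
        return h, y

    def loss(self, examples):
        """Total squared error over the examples (how 'wrong' the network is)."""
        return sum(0.5 * (self.forward(x)[1] - t) ** 2 for x, t in examples)

    def train_step(self, examples, lr=0.5):
        """One batch gradient-descent step, with gradients from backpropagation."""
        n_hidden = len(self.w2)
        g_w1 = [[0.0] * len(row) for row in self.w1]
        g_b1 = [0.0] * n_hidden
        g_w2 = [0.0] * n_hidden
        g_b2 = 0.0
        for x, t in examples:
            h, y = self.forward(x)
            # Error signal at the output unit: d(loss)/d(output pre-activation).
            d_out = (y - t) * y * (1 - y)
            g_b2 += d_out
            for j in range(n_hidden):
                g_w2[j] += d_out * h[j]
                # Pass the error back to hidden unit j through its outgoing connection.
                d_hid = d_out * self.w2[j] * h[j] * (1 - h[j])
                g_b1[j] += d_hid
                for i, xi in enumerate(x):
                    g_w1[j][i] += d_hid * xi
        # Nudge every connection strength in the direction that reduces the error.
        for j in range(n_hidden):
            self.w2[j] -= lr * g_w2[j]
            self.b1[j] -= lr * g_b1[j]
            for i in range(len(self.w1[j])):
                self.w1[j][i] -= lr * g_w1[j][i]
        self.b2 -= lr * g_b2


if __name__ == "__main__":
    # XOR: output 1 only when exactly one input is 1.
    xor_examples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
    net = TwoLayerNet()
    print(f"loss before training: {net.loss(xor_examples):.4f}")
    for _ in range(5000):
        net.train_step(xor_examples)
    print(f"loss after training:  {net.loss(xor_examples):.4f}")
    for x, t in xor_examples:
        print(x, "->", round(net.forward(x)[1], 3), "(target", t, ")")
```

With a fixed seed the run is repeatable, and the loss should fall as training proceeds. A network this small can occasionally settle in a poor local minimum; trying a different seed or more hidden units usually helps.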
He is also upbeat about the eventual triumph of personal assistants and driverless vehicles: "I think it's very clear now that we will have self-driving cars. In five to ten years, when you go to buy a family car, it will be an autonomous model. That is my bet." There is a lot machines can do, in Hinton's view, to make our lives easier, "with all of us having an intelligent personal assistant to help in our daily tasks."

As to the risks attached to artificial intelligence, particularly the scenario beloved of science fiction films where intelligent machines rebel against their creators, Hinton believes that "we are very far away" from this being a real threat. What does concern him are the possible military uses of intelligent machines, like the deployment of "squadrons of killer drones" programmed to attack targets in conflict zones. "That is a present danger which we must take very seriously," the scientist warns. "We need a Geneva Convention to regulate the use of such autonomous weapons."

Bio notes: Geoffrey Hinton

After completing a BA in Experimental Psychology at Cambridge University (1970) and a PhD in Artificial Intelligence at the University of Edinburgh (1978), Geoffrey Hinton (London, 1947) held teaching positions at the University of Sussex (United Kingdom) and at the University of California San Diego and Carnegie Mellon University (United States), before joining the faculty at the University of Toronto (Canada). From 1998 to 2001, he led the Gatsby Computational Neuroscience Unit at University College London, one of the world's most renowned centers in this area. He subsequently returned to Toronto, where he is currently Professor in the Department of Computer Science.
Between 2004 and 2013, he headed the Neural Computation and Adaptive Perception program of the Canadian Institute for Advanced Research, one of the country's most prestigious scientific organizations, and since 2013 he has collaborated with Google as a Distinguished Researcher on the development of speech and image recognition systems, language processing programs and other deep learning applications.

Hinton is a Fellow of the British Royal Society, the Royal Society of Canada and the Association for the Advancement of Artificial Intelligence. He is also an Honorary Member of the American Academy of Arts and Sciences and the U.S. National Academy of Engineering. A past president of the Cognitive Science Society (1992-1993), he holds honorary doctorates from the universities of Edinburgh, Sussex and Sherbrooke. His numerous distinctions include the David E. Rumelhart Prize for outstanding contributions to the theoretical foundations of human cognition (2001) and the IJCAI Research Excellence Award (2005) of the International Joint Conferences on Artificial Intelligence Organization, one of the most prestigious awards in the artificial intelligence field. He also holds the Gerhard Herzberg Canada Gold Medal of the Natural Sciences and Engineering Research Council (2010), considered Canada's top award for science and engineering, and the James Clerk Maxwell Medal bestowed by the Institute of Electrical and Electronics Engineers (IEEE) and the Royal Society of Edinburgh (2016).

About the BBVA Foundation Frontiers of Knowledge Awards

The BBVA Foundation has as its core objectives the promotion of scientific knowledge, the transmission to society of scientific and technological culture, and the recognition of talent and excellence across a broad spectrum of disciplines, from science to the arts and humanities.
The BBVA Foundation Frontiers of Knowledge Awards were established in 2008 to recognize outstanding contributions in a range of scientific, technological and artistic areas, along with knowledge-based responses to the central challenges of our times. The areas covered by the Frontiers Awards are congruent with the knowledge map of the 21st century, in terms of both the disciplines they address and their assertion of the value of cross-disciplinary interaction. Their eight categories include classical areas like Basic Sciences and Biomedicine, and other, more recent areas characteristic of our time, ranging from Information and Communication Technologies, Ecology and Conservation Biology, Climate Change, and Economics, Finance and Management to Development Cooperation and the innovative artistic realm of Contemporary Music.

The BBVA Foundation is aided in the organization of the awards by the Spanish National Research Council (CSIC), the country's premier public research agency. As well as designating each jury chair, the CSIC is responsible for appointing the technical evaluation committees that undertake an initial assessment of the candidates put forward by numerous institutions across the world, and draw up a reasoned shortlist for the consideration of the juries.

Information and Communication Technologies jury and technical committee

The jury in this category was chaired by Georg Gottlob, Professor of Computer Science at the University of Oxford (United Kingdom) and Adjunct Professor of Computer Science at Vienna University of Technology (Austria). The secretary was Ramón López de Mántaras, a Research Professor at the Spanish National Research Council (CSIC) and Director of its Artificial Intelligence Research Institute.
Remaining members were Regina Barzilay, Delta Electronics Professor of Electrical Engineering and Computer Science at Massachusetts Institute of Technology (United States); Liz Burd, Pro-Vice Chancellor in Learning and Teaching at the University of Newcastle (Australia); Oussama Khatib, Director of the Robotics Laboratory and Professor of Computer Science at Stanford University (United States); Rudolf Kruse, Professor in the Faculty of Computer Science at the University of Magdeburg (Germany); and Joos Vandewalle, Emeritus Professor in the Department of Electrical Engineering (ESAT) at Katholieke Universiteit Leuven (Belgium).

The CSIC technical committee was coordinated by Ana Guerrero, the Council's Deputy Vice President for Scientific and Technical Areas, and made up of: Gonzalo Álvarez, Tenured Researcher in the Institute of Physical and Information Technologies (ITEFI); Carmen García, Research Professor in the Institute of Corpuscular Physics (IFIC) and Coordinator of the Physical Science and Technologies Area; Jesús Marco de Luca, Research Professor in the Institute of Physics of Cantabria (IFCA); Pedro Meseguer, Research Scientist at the Artificial Intelligence Research Institute (IIIA); and Federico Thomas Arroyo, Research Professor in the Institute of Robotics and Industrial Computing (IRII).

Previous awardee in this category

The Information and Communication Technologies award in last year's edition went to mathematician Stephen Cook, for defining what computers can and cannot solve efficiently.

Five of the 79 winners in earlier editions of the BBVA Foundation Frontiers of Knowledge Awards have gone on to win the Nobel Prize. Shinya Yamanaka, the 2010 Biomedicine laureate, won the Nobel Prize in Medicine in 2012; Robert J. Lefkowitz, awardee in the same Frontiers category in 2009, won the Chemistry Nobel in 2012. In Economics, Finance and Management, three Frontiers laureates were later honored with the Nobel: Lars Peter Hansen, winner of the Frontiers Award in 2010 and the Nobel Prize in 2013; Jean Tirole, Frontiers laureate in 2008 and Nobel laureate in 2014; and Angus Deaton, 2011 Frontiers laureate and Nobel laureate in 2015.

For more information, contact the BBVA Foundation Department of Communication and Institutional Relations (+34 91 374 5210/+34 91 374 3139/+34 91 374 8173, [email protected]) or visit www.fbbva.es

To view the original version on PR Newswire, visit: http://www.prnewswire.com/news-releases/the-bbva-foundation-bestows-its-award-on-the-architect-of-the-first-machines-capable-of-learning-in-the-same-way-as-the-human-brain-300392266.html

SOURCE BBVA Foundation
